The Inherent Weaknesses of the AD Scientific Index

The AD Scientific Index (ADSI) has garnered attention for providing a detailed, data-driven evaluation of researchers worldwide. It ranks millions of researchers globally (or "scientists," as ADSI calls them), based primarily on their Google Scholar profiles. ADSI's extensive listing aims to capture the scholarly contributions of researchers across all fields. Researcher profiles are evaluated alongside their institutional affiliations, so institutions with high-profile researchers generally rank higher. However, several weaknesses in ADSI's methodology and the self-managed nature of Google Scholar profiles call its accuracy into question. Observed inaccuracies include the following: some researchers with Google Scholar profiles are not included in the ADSI list, some profiles claim other authors' publications, and some profiles have been manipulated to increase citation counts and h-indices.

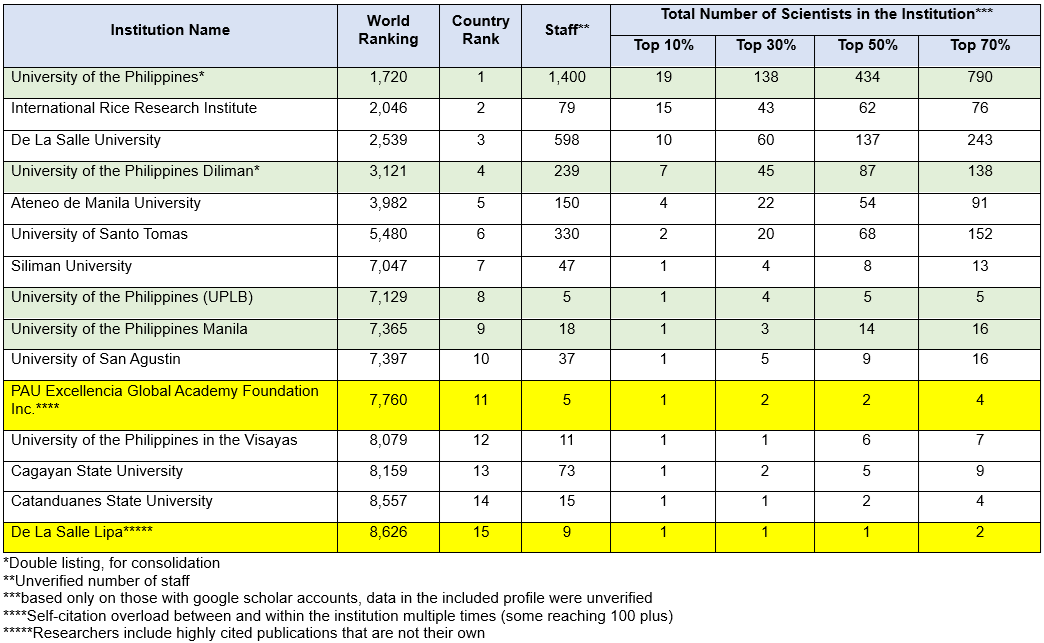

The table above shows the rankings of institutions in the Philippines. While some of the reported institutions contain legitimate profiles and figures, others simply do not add up: the listed staff were not verified, some entries should be removed, and some profiles are bogus or contain bogus claims or manipulated citations.

Just as the list contains bogus names, it also omits legitimate ones. On examination, some researchers whose publications are indexed in Google Scholar have no associated profile and are therefore excluded from the ranking. If these researchers were included in the ADSI index, the rankings and profiles of the listed institutions would almost certainly change.

Self-Setup of Profiles and Potential for Inaccuracies

One critical weakness of ADSI is that the Google Scholar profiles it relies on are self-constructed, which allows researchers to misrepresent their scholarly work. For instance, individuals can inadvertently or intentionally include publications they did not author. Such errors can arise from misclassification by the system, human oversight, or intentional manipulation: researchers or institutional staff may knowingly adjust profiles to improve personal or institutional rankings. Because institutions may be unaware of these errors or manipulations, the inaccuracies can persist in ADSI rankings, ultimately distorting the perceived research output and quality of both individuals and institutions.

Institutional Misattribution

ADSI’s institutional rankings sometimes aggregate personnel profiles inaccurately because researchers list their institutional affiliations inconsistently. In the Philippines, for example, the AD Scientific Index includes a consolidated rank for the University of the Philippines (UP) System while also listing individual campuses separately. Without clear differentiation, profiles from one campus may be attributed to the UP System as a whole, which distorts campus-specific counts and skews the rankings. If profiles are not properly segregated, this aggregation problem can also misrepresent the performance of individual campuses.
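To illustrate the double-counting problem, here is a minimal Python sketch that rolls campus-level profiles up to a system-level headcount so that each researcher is counted once. The profile IDs and affiliation records are invented, and the rule that a named campus takes precedence over the generic "UP System" label is an assumption for illustration, not ADSI's actual procedure.

```python
from collections import defaultdict

SYSTEM_NAME = "UP System"  # system-level label; every other affiliation is treated as a campus

# Hypothetical affiliation records: (profile_id, affiliation as written on the profile).
records = [
    ("p1", "UP Diliman"),
    ("p1", "UP System"),   # same researcher listed a second time under the system label
    ("p2", "UP Manila"),
    ("p3", "UP System"),   # campus not stated
    ("p4", "UP Diliman"),
]

def campus_counts(rows):
    """Assign each profile to one unit, preferring a named campus over the system label."""
    unit_of = {}
    for pid, aff in rows:
        # A campus affiliation overrides the system label; the system label
        # is kept only when nothing more specific is known for that profile.
        if aff != SYSTEM_NAME or pid not in unit_of:
            unit_of[pid] = aff
    counts = defaultdict(int)
    for unit in unit_of.values():
        counts[unit] += 1
    return dict(counts)

def system_total(rows):
    """System-level headcount: each unique profile counted once."""
    return len({pid for pid, _ in rows})

print(len(records))            # 5  (a naive row count double-counts p1)
print(campus_counts(records))  # {'UP Diliman': 2, 'UP Manila': 1, 'UP System': 1}
print(system_total(records))   # 4
```

A naive count of the rows would report five researchers and credit `p1` twice; deduplicating by profile gives four, and the per-unit counts sum to the system total.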

Dubious or Inaccurate Profiles

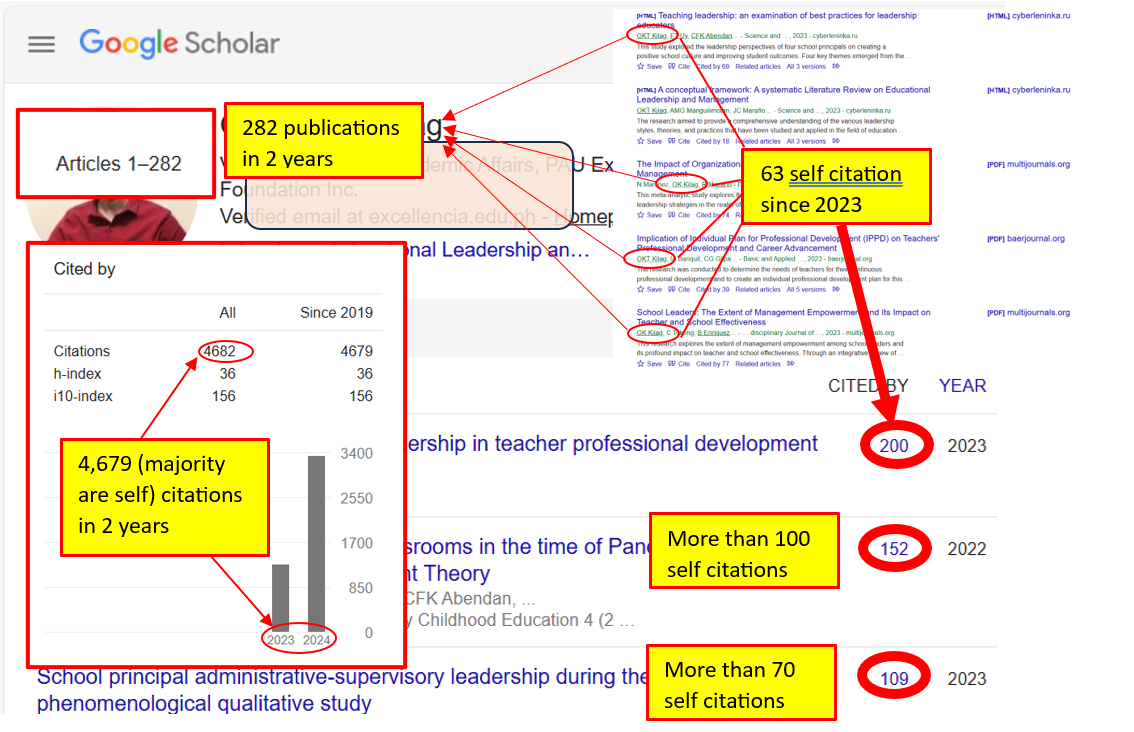

Dubious profiles further complicate the ADSI rankings. Profiles of actual researchers may display publications they did not author, artificially inflating their citation counts and apparent research impact. At one university, for example, some listed staff have erroneous profiles. Although only nine of its researchers are listed, the institution ranks highly because some of them appear among the top 10% globally. In one instance, a researcher’s profile lists a top-cited paper that does not actually belong to them, inaccurately boosting the institution's rank.

Intentional Manipulation through Self-Citations and Predatory Publishing

A more concerning issue is the intentional manipulation of citation counts through self-citation and publication in low-quality or predatory journals. Some researchers may publish extensively in obscure or low-quality journals, citing their own or their colleagues' work repeatedly to boost citation metrics. This practice artificially inflates h-indices and other parameters that ADSI relies on for its rankings. Self-citation can be legitimate, particularly in niche fields with few other active researchers, but excessive self-citation is questionable and can misrepresent a researcher's true impact.
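The effect of self-citation on the h-index (the largest h such that h papers each have at least h citations) can be shown with a short Python sketch. The per-paper counts below are invented, and separating out self-citations assumes access to citing-author data for each paper, which is a hypothetical input here rather than something a Google Scholar profile displays.

```python
def h_index(citations):
    """Largest h such that at least h papers have h or more citations each."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h

# Invented example: total citations per paper and how many of those are self-citations.
papers = [
    {"total": 25, "self": 3},
    {"total": 12, "self": 8},
    {"total": 9, "self": 7},
    {"total": 8, "self": 6},
    {"total": 7, "self": 5},
    {"total": 6, "self": 5},
    {"total": 3, "self": 1},
]

with_self = h_index([p["total"] for p in papers])
without_self = h_index([p["total"] - p["self"] for p in papers])
print(with_self, without_self)  # 6 2
```

Half of the 70 citations in this invented example are self-citations, and removing them drops the h-index from 6 to 2.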

Global Relevance and Recurrence of Issues

These weaknesses are not unique to the Philippines but are prevalent globally, impacting the accuracy of ADSI’s institutional and individual rankings. Without intervention from institutions and researchers to rectify profile inaccuracies, ADSI's ranking system will likely continue to face criticism.

Literature on ADSI Weaknesses and Potential Corrections

Academic discussions of ADSI raise similar concerns about self-managed researcher profiles, reliance on self-citation, and susceptibility to predatory journals. Studies suggest a more centralized, institutionally controlled validation process for profiles to mitigate these issues. The literature also recommends using curated databases such as Scopus and Web of Science alongside ADSI to provide a cross-referenced view of research impact rather than relying solely on Google Scholar.

Recommended Precautions for Effective Use of ADSI

To make ADSI a more useful tool for evaluating academic institutions and researchers at the national and regional levels, the following precautions are recommended:

Institutional Monitoring and Verification: Institutions should implement internal processes to regularly review and verify their researchers’ profiles, ensuring that listed publications and affiliations are accurate. This approach helps reduce misrepresentation and correct errors in Google Scholar profiles.

Use as a Supplementary Tool: ADSI should be used in conjunction with other citation databases such as Scopus and Web of Science. A multi-database approach gives more comprehensive insight into research output and impact and limits the effect of manipulations that would distort a ranking based on ADSI alone.

Guidelines for Self-Citation Practices: Institutions should provide clear guidelines regarding acceptable levels of self-citation and collaboration, particularly in cases where researchers publish in niche fields. Encouraging ethical citation practices can curb excessive self-citations and improve ranking integrity.

Inclusion of Non-Indexed Quality Journals: For countries and institutions with quality publications in non-indexed journals, it may be necessary to recognize these contributions separately. This practice allows a more balanced evaluation while acknowledging high-quality research that ADSI or Google Scholar may overlook.

Regular Review and Auditing by Regulatory Bodies: For national use, entities like the Commission on Higher Education (CHED) in the Philippines should periodically audit ADSI and similar indices, providing universities with guidelines on using and interpreting these rankings.
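The first three precautions can be combined into a simple internal audit. The Python sketch below is hypothetical throughout: the `Profile` fields, the thresholds, and the assumption that self-citation counts come from Scopus are placeholders an institution would replace with its own data sources and policy, and title matching after normalization stands in for the more robust DOI matching a real audit would use.

```python
import re
from dataclasses import dataclass

# Hypothetical thresholds; an institution would set its own values.
MAX_SCHOLAR_TO_SCOPUS = 3.0   # Scholar totals are usually higher, so only large gaps are flagged
MAX_SELF_CITE_SHARE = 0.25
NICHE_SELF_CITE_SHARE = 0.40  # looser limit for designated niche fields

@dataclass
class Profile:
    name: str
    titles: list[str]       # publications listed on the Google Scholar profile
    scholar_cites: int      # citation total shown on Google Scholar
    scopus_cites: int       # citation total for the same researcher in Scopus
    self_cites: int         # self-citations (assumed to come from Scopus data)
    niche_field: bool = False

def normalize(title):
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", title.lower()).split())

def audit(profile, institutional_titles):
    """Return a list of issues for human review; an empty list means nothing was flagged."""
    issues = []
    # Precaution 1: publications on the profile that the institution has no record of.
    known = {normalize(t) for t in institutional_titles}
    for t in profile.titles:
        if normalize(t) not in known:
            issues.append(f"unverified publication: {t}")
    # Precaution 2: Google Scholar total far above the Scopus total.
    ratio = profile.scholar_cites / max(profile.scopus_cites, 1)  # avoid division by zero
    if ratio > MAX_SCHOLAR_TO_SCOPUS:
        issues.append(f"Scholar/Scopus citation ratio {ratio:.1f}")
    # Precaution 3: self-citation share above the guideline for the field.
    limit = NICHE_SELF_CITE_SHARE if profile.niche_field else MAX_SELF_CITE_SHARE
    share = profile.self_cites / profile.scopus_cites if profile.scopus_cites else 0.0
    if share > limit:
        issues.append(f"self-citation share {share:.0%}")
    return issues

# Hypothetical institutional record and two hypothetical researchers.
institutional_titles = ["Rice yield modeling in Luzon", "Soil pH and crop growth"]
researcher_a = Profile("Researcher A",
                       ["Rice Yield Modeling in Luzon", "Deep Learning for Protein Folding",
                        "Soil pH and Crop Growth."],
                       scholar_cites=950, scopus_cites=90, self_cites=45)
researcher_b = Profile("Researcher B", ["Rice yield modeling in Luzon"],
                       scholar_cites=400, scopus_cites=250, self_cites=30)

issues_a = audit(researcher_a, institutional_titles)
issues_b = audit(researcher_b, institutional_titles)
print(issues_a)
print(issues_b)  # []
```

A flag is a prompt for human review, not proof of misconduct: Google Scholar totals are expected to exceed Scopus totals to some degree, and niche fields may legitimately show higher self-citation rates.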

While ADSI has limitations, it remains a valuable tool for tracking researchers' output, particularly where internal databases are lacking. With vigilant verification, ADSI data can still be used to monitor researchers' progress within institutions and serve as a reference point. National and institutional bodies such as CHED can cautiously use ADSI data alongside Scopus, applying the necessary corrections, to produce more comprehensive research evaluations. Used with awareness of its weaknesses, ADSI can continue to inform academia about research trends and performance globally.